Suppose someone asked you to answer, in one word, what an assessment should ultimately provide. What would you say? Maybe “results?” Or “direction?” Or, better yet, “truth?” Though there are no wrong answers, here at NWEA we would probably say, “clarity.” (Though “truth” is a very close second!) Why? Because without a clear picture of where a student is, determining effective next steps for growth usually means guessing. Clarity is essential. The same is true for commonly used phrases, like “norm- vs. criterion-referenced in assessment.”

We think it’s important to correct any misinformation that’s circulating through the assessment world. The idea of “norm- vs. criterion-referenced tests” has been making the rounds once again, and it’s worth setting the record straight. So let’s get right to it: The first thing you should know about this subject is that there is no such thing as a “norm-referenced test.” Or a “criterion-referenced test.” And certainly not a “norm- and criterion-referenced test.”

“I think this is a really large fundamental assessment literacy piece that is very misunderstood by many, many people,” says Adam Wolfgang, director of product management at NWEA. “The idea of there being an assessment that is either criterion- or norm-referenced is a myth. The assessment itself is neither.”

The truth is that “norm-referenced” and “criterion-referenced” refer to ways to compare student scores, not to tests. A norm-referenced comparison looks at a student’s performance in relation to that student’s peers, while a criterion-referenced one gauges a student’s performance against a fixed standard, such as grade-level proficiency. Just about any major assessment available today uses both norm- and criterion-referenced measurements. Let’s take MAP® Growth™ as an example.

All of MAP Growth’s questions are intentionally written to be aligned to individual state standards. (For more on this, I encourage you to read our white paper “MAP Growth linking studies: Intended uses, methodology, and recent studies.”) So each question asks whether a student knows the content required for grade-level proficiency, which is a criterion-referenced measurement. The full results of the assessment (for MAP Growth, the RIT score) are then compared with the results of students who are similar to our test taker, which is a norm-referenced comparison.
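To make the distinction concrete, here is a minimal sketch in Python of the two comparisons applied to the same score. Everything in it is an assumption for illustration: the function names, the peer scores, and the cut score are hypothetical, and this is not how MAP Growth or NWEA norms are actually computed.

```python
from bisect import bisect_right


def percentile_rank(score, norm_scores):
    """Norm-referenced: percentage of the comparison group scoring at or below `score`."""
    ordered = sorted(norm_scores)
    return 100.0 * bisect_right(ordered, score) / len(ordered)


def meets_criterion(score, cut_score):
    """Criterion-referenced: does the score reach a fixed proficiency cut?"""
    return score >= cut_score


# Hypothetical data, invented for this example only.
peer_scores = [188, 192, 195, 199, 201, 204, 207, 210, 214, 220]
student_score = 204
proficiency_cut = 206

rank = percentile_rank(student_score, peer_scores)
proficient = meets_criterion(student_score, proficiency_cut)

print(f"Scored at or above {rank:.0f}% of peers")  # norm-referenced view
print(f"Meets proficiency cut: {proficient}")      # criterion-referenced view
```

The same score of 204 feeds both functions. Only the comparison changes, which is why the label belongs to the comparison and not to the test.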

When we start looking at what is behind those comparisons, there are definitely important differences between assessments. For example, MAP Growth regularly tests the most students, so that norm-referenced comparison (between students) draws from the world’s largest pool of student data. But, again, almost every assessment out there uses both norm- and criterion-referenced measurements. Focusing on this can become a serious distraction from two much more important questions we should be asking: How much can you trust your assessment? Can your assessment effectively guide instruction? Let’s explore the answers.

MAP Growth assessments are intentionally calibrated to balance precision, the length of the assessment, and its ability to diagnose.

This balance is designed to provide the most accurate information in approximately 43 questions, or about one class period. We could easily double the number of questions, but we have instead designed the assessment in response to what educators have clearly told us: assessments need to provide accurate information within a reasonable amount of time, especially for younger students.

This balance also matters for precision and diagnostic value. For example, if a student tested with MAP Growth both today and tomorrow, the two results would be within three points of each other on the RIT scale. Other tests fall in the 9–10 point range, a difference large enough to place students in an intervention program incorrectly. Educators trust MAP Growth because it’s accurate.
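Here is a rough sketch of why that spread matters for placement decisions. It treats the retest difference as a simple plus-or-minus band around an observed score, which is a simplifying assumption and not a formal measurement-error model. The function name, the observed score, and the cut score are all hypothetical; only the 3-point and 10-point margins come from the figures above.

```python
def placement_is_ambiguous(observed_score, margin, cut_score):
    """Return True if the band observed_score +/- margin includes the cut score.

    When the band includes the cut, a retest could land on either side of it,
    so a placement decision based on one score might flip.
    """
    return observed_score - margin <= cut_score <= observed_score + margin


# Hypothetical values, for illustration only.
intervention_cut = 200  # students scoring below this would be placed
observed = 206          # one student's observed score

for margin in (3, 10):
    ambiguous = placement_is_ambiguous(observed, margin, intervention_cut)
    print(f"margin of {margin} points: placement ambiguous = {ambiguous}")
```

With the 3-point band, this student sits clearly above the hypothetical cut. With the 10-point band, the same score could plausibly fall on either side of it.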

NWEA president Chris Minnich is known for saying, “A test alone never changed a kid’s life. It’s what happens after the test that matters.” This is the key, right?

Case studies from large and small, rural and urban districts continually show how MAP Growth informs actionable next steps. But there’s actually a deeper question here, about choice: Does your assessment let teachers use their expertise to choose what to do with the data? Or are you locked into a predetermined, one-size-fits-all path?

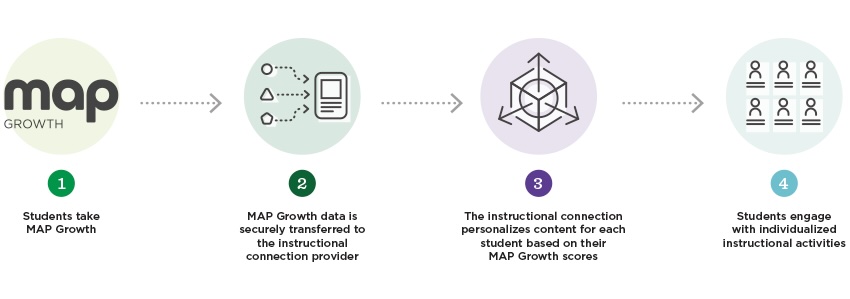

We believe in choices here, and we know our partners do, too. That’s why there is a seamless connection from MAP Growth to the widest range of learning tools from over thirty of the world’s leading instructional providers.

You know how to best meet the needs of your students. We’re here to help you get the best information to move your students forward.

Let’s finish up here with one final question: If you had only three words to describe the qualities you value most in an assessment or instructional provider, what would those words be? There are no wrong answers, of course, but what we have talked about here might be pertinent.

As always, thank you so much for everything you do every day to move your students forward and prepare them for their next great adventure. And, OK, one last question that I am not even going to bother to answer because it is so obvious: Is there anything more important?